Human-Robot Interaction for Assisted Object Grasping by a Wearable Robotic Object Manipulation Aid for the Blind

Abstract

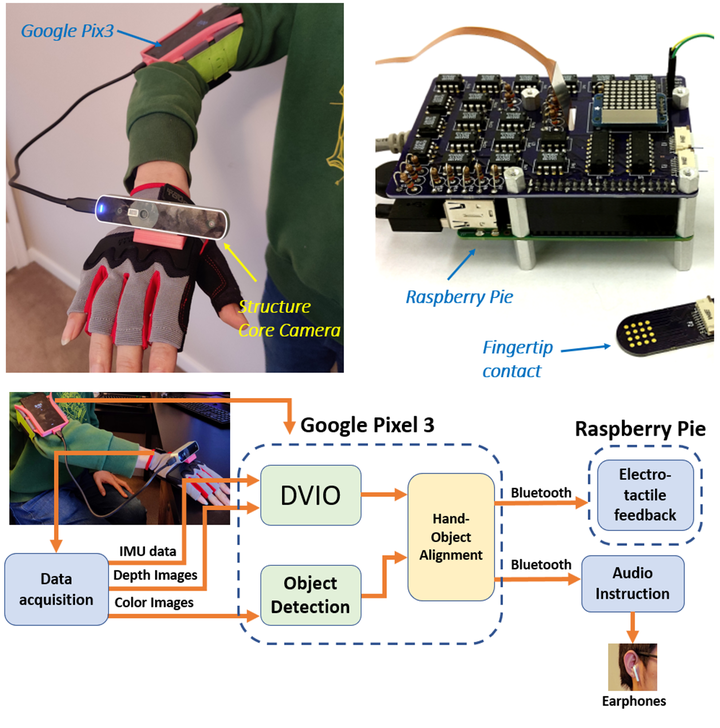

This paper presents a new hand-worn device, called the wearable robotic object manipulation aid (W-ROMA), that helps a visually impaired individual locate a target object and guides the hand to take hold of it. The device consists of a sensing unit and a guiding unit. The sensing unit uses a Structure Core sensor, comprising an RGB-D camera and an Inertial Measurement Unit (IMU), to detect the target object and estimate the device pose. Based on the object and pose information, the guiding unit computes the Desired Hand Movement (DHM) and conveys it to the user through an electro-tactile display to guide the hand toward the object. A speech interface is developed as an additional way to convey the DHM and to support human-robot interaction. A new method, called Depth Enhanced Visual-Inertial Odometry (DVIO), is proposed for 6-DOF device pose estimation. It tightly couples the camera’s depth and visual data with the IMU data in a graph optimization process to produce more accurate pose estimates than the existing state-of-the-art approach. Experimental results demonstrate that the DVIO method outperforms the state-of-the-art VIO approach in 6-DOF pose estimation.
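As a rough illustration of how object and pose information could be combined into a hand-movement cue, the sketch below expresses a world-frame object position in the device frame, given an estimated device pose. This is an assumption made for illustration only: the abstract does not define how the DHM is computed, and the function names `desired_hand_movement` and `yaw_rotation`, the frame conventions, and the sample values are all hypothetical.

```python
import math


def desired_hand_movement(R_wd, t_wd, p_obj_w):
    """Return the object position expressed in the device frame.

    Hypothetical stand-in for a DHM computation (not the paper's method).
    R_wd    : 3x3 rotation matrix mapping device-frame vectors to the world frame
    t_wd    : device position in the world frame
    p_obj_w : object position in the world frame
    """
    # Offset from device to object, still in world coordinates.
    d = [p_obj_w[i] - t_wd[i] for i in range(3)]
    # Rotate into the device frame: p_d = R_wd^T * (p_w - t_wd).
    return [sum(R_wd[r][c] * d[r] for r in range(3)) for c in range(3)]


def yaw_rotation(theta):
    """Rotation about the z-axis by theta radians."""
    c, s = math.cos(theta), math.sin(theta)
    return [[c, -s, 0.0], [s, c, 0.0], [0.0, 0.0, 1.0]]


# Illustrative values: the device sits at (1, 0, 0) and is yawed 90 degrees,
# so its x-axis points along world y. The object is 2 m away along world y.
R = yaw_rotation(math.pi / 2)
t = [1.0, 0.0, 0.0]
obj = [1.0, 2.0, 0.0]

move = desired_hand_movement(R, t, obj)
distance = math.sqrt(sum(v * v for v in move))
```

In this toy setup the resulting vector lies along the device's own x-axis, which is the kind of device-relative direction a tactile or speech cue would need to communicate.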