A Visual Positioning System for Indoor Blind Navigation

Abstract

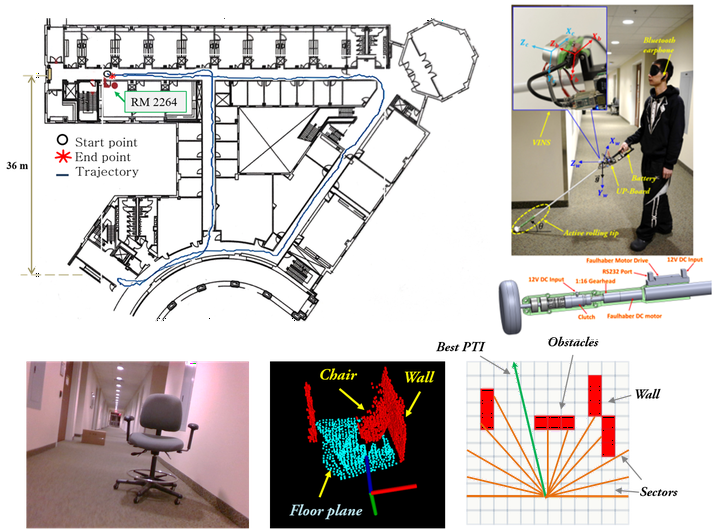

This paper presents a new visual positioning system (VPS) for real-time pose estimation of a robotic navigation aid (RNA) that assists a visually impaired person with navigation. The backbone of the VPS is a new depth-enhanced visual-inertial odometry (DVIO) that uses an RGB-D camera and an inertial measurement unit (IMU) to estimate the device’s 6-DOF pose. The DVIO method extracts a geometric feature (in this work, the floor plane) from the camera’s depth data and integrates its measurement residuals with those of the visual features and the inertial data in a graph optimization framework for pose estimation. A new measure based on the Sampson error is introduced to describe the measurement residuals of both near-range visual features, whose depths (measured by the depth camera) are accurate, and far-range visual features, whose depths are unknown. This measure allows both types of visual features to be incorporated into the graph optimization. The use of the geometric feature and the Sampson error improves pose estimation accuracy and precision. The DVIO method is paired with a particle-filter-based localization (PFL) method that localizes the RNA in a 2D floor plan, and this location information is used to guide the visually impaired person to the destination. The PFL reduces the RNA’s position and heading errors on the horizontal plane by aligning the camera’s depth data with the floor plan map. Together, the DVIO and the PFL allow the VPS to accurately estimate the RNA’s pose for wayfinding while also generating a 3D local map for obstacle avoidance. Experimental results demonstrate the usefulness of the RNA in wayfinding and obstacle avoidance for visually impaired people in indoor spaces.
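The abstract does not spell out the paper's Sampson-based measure, so the sketch below shows only the standard first-order Sampson error for the epipolar constraint x2ᵀ E x1 = 0 between two calibrated views, which is the textbook quantity such a measure builds on. It depends only on each feature's bearing, not its depth, which is why it can serve as a residual for far-range features. The function names, the pose convention X2 = R·X1 + t, and the use of normalized image coordinates are assumptions made for illustration; this is not the authors' implementation.

```python
import math
from typing import List, Sequence

Matrix3 = List[List[float]]


def skew(t: Sequence[float]) -> Matrix3:
    """Cross-product matrix [t]_x, so that skew(t) applied to v equals t x v."""
    tx, ty, tz = t
    return [[0.0, -tz, ty],
            [tz, 0.0, -tx],
            [-ty, tx, 0.0]]


def matmul(a: Matrix3, b: Matrix3) -> Matrix3:
    return [[sum(a[i][k] * b[k][j] for k in range(3)) for j in range(3)] for i in range(3)]


def matvec(a: Matrix3, v: Sequence[float]) -> List[float]:
    return [sum(a[i][k] * v[k] for k in range(3)) for i in range(3)]


def transpose(a: Matrix3) -> Matrix3:
    return [[a[j][i] for j in range(3)] for i in range(3)]


def essential_matrix(R: Matrix3, t: Sequence[float]) -> Matrix3:
    """E = [t]_x R, assuming a camera-1 point X1 maps to camera 2 as X2 = R X1 + t."""
    return matmul(skew(t), R)


def sampson_residual(E: Matrix3, x1: Sequence[float], x2: Sequence[float]) -> float:
    """Signed first-order (Sampson) approximation of the geometric epipolar error.

    x1 and x2 are homogeneous normalized image coordinates (x, y, 1) of the same
    feature in views 1 and 2. The square of the result is the Sampson distance.
    """
    Ex1 = matvec(E, x1)
    Etx2 = matvec(transpose(E), x2)
    algebraic = sum(x2[i] * Ex1[i] for i in range(3))  # value of x2^T E x1
    # Squared gradient of the constraint w.r.t. the four image coordinates.
    grad_sq = Ex1[0] ** 2 + Ex1[1] ** 2 + Etx2[0] ** 2 + Etx2[1] ** 2
    if grad_sq == 0.0:
        raise ValueError("degenerate geometry (e.g. zero translation): E gives no constraint")
    return algebraic / math.sqrt(grad_sq)


# Usage: a sideways camera translation of 0.2 with no rotation.
I3 = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]]
E = essential_matrix(I3, [0.2, 0.0, 0.0])
x1 = [0.25, 0.125, 1.0]  # projection of X1 = (1, 0.5, 4) in view 1
x2 = [0.3, 0.125, 1.0]   # projection of X2 = X1 + t = (1.2, 0.5, 4) in view 2
print(sampson_residual(E, x1, x2))                 # consistent match: 0.0
print(sampson_residual(E, x1, [0.3, 0.135, 1.0]))  # about -0.00707 (= -0.01 / sqrt(2))
```

In the second call the point in view 2 lies 0.01 off its epipolar line (y = 0.125). The Sampson error spreads the minimal correction over both images, moving each point by 0.005, so the residual magnitude is 0.01/√2 rather than 0.01.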