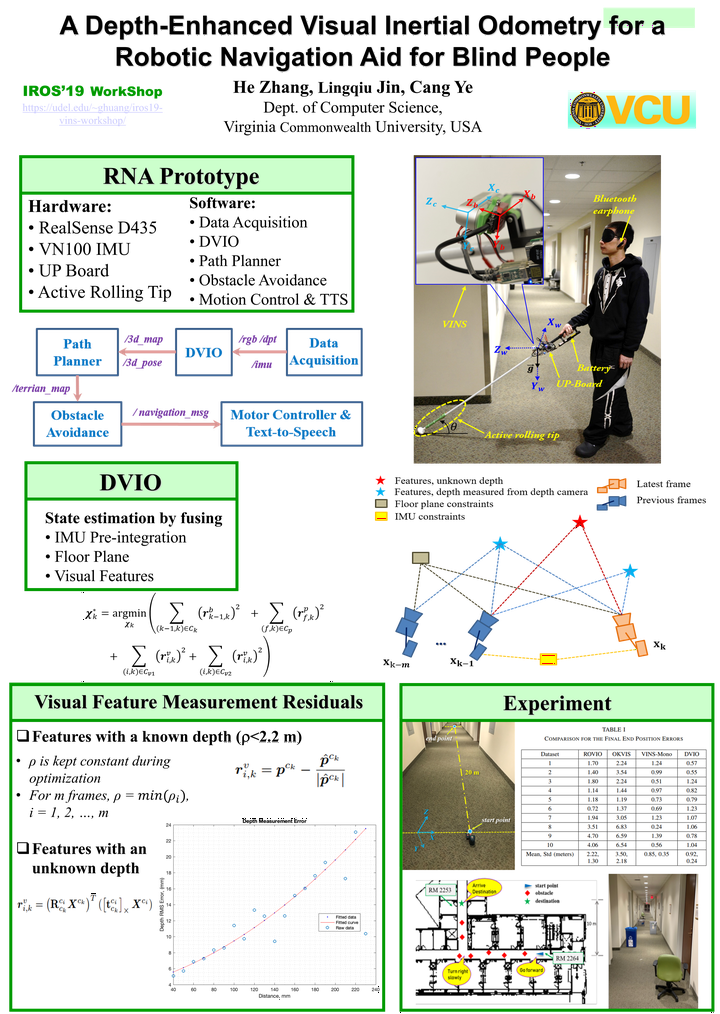

A Depth-Enhanced Visual Inertial Odometry for a Robotic Navigation Aid for Blind People

Abstract

This paper presents a new method, called depth-enhanced visual-inertial odometry (DVIO), for real-time pose estimation of a robotic navigation aid (RNA) for assistive wayfinding. The method estimates the device pose by using an RGB-D camera and an inertial measurement unit (IMU). It extracts the floor plane from the camera’s depth data and tightly couples the floor plane, the visual features (with depth data from the RGB-D camera or with unknown depth), and the IMU’s inertial data in a graph optimization framework for 6-DOF pose estimation. Because it uses the floor plane and the depth data from the RGB-D camera, the DVIO method achieves better pose estimation accuracy than its VIO counterpart. To enable real-time computing on the RNA, the size of the sliding window for the graph optimization is reduced, trading some accuracy for computational efficiency. Experimental results demonstrate that the method achieved a pose estimation accuracy similar to that of the state-of-the-art VIO while running much faster (with a pose update rate of 18 Hz).
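To make the floor-plane extraction step concrete, the following is a minimal sketch of one common way to fit a plane to depth points: RANSAC followed by a least-squares refit. The abstract does not state which extraction technique DVIO uses, so this is an assumption; the function names `fit_plane` and `ransac_floor`, the iteration count, and the 2 cm inlier tolerance are hypothetical choices made for illustration only.

```python
import numpy as np

def fit_plane(points):
    """Least-squares plane through an (N, 3) point set.

    Returns a unit normal n and offset d such that n . p + d = 0,
    with the sign chosen so that d >= 0.
    """
    centroid = points.mean(axis=0)
    # The right singular vector with the smallest singular value
    # is the direction of least variance, i.e. the plane normal.
    _, _, vt = np.linalg.svd(points - centroid)
    normal = vt[-1]
    d = -float(normal @ centroid)
    if d < 0:
        normal, d = -normal, -d
    return normal, d

def ransac_floor(points, n_iters=200, tol=0.02, seed=0):
    """Hypothetical RANSAC plane search (not the paper's stated method).

    points: (N, 3) array of 3D points back-projected from the depth image.
    tol:    inlier distance threshold in metres (assumed value).
    Returns (normal, d, inlier_mask).
    """
    rng = np.random.default_rng(seed)
    best_inliers = None
    for _ in range(n_iters):
        sample = points[rng.choice(len(points), 3, replace=False)]
        normal = np.cross(sample[1] - sample[0], sample[2] - sample[0])
        norm = np.linalg.norm(normal)
        if norm < 1e-9:  # skip (near-)collinear samples
            continue
        normal = normal / norm
        dist = np.abs((points - sample[0]) @ normal)
        inliers = dist < tol
        if best_inliers is None or inliers.sum() > best_inliers.sum():
            best_inliers = inliers
    if best_inliers is None:
        raise ValueError("no non-degenerate sample found")
    # Refit on all inliers of the best hypothesis for a less noisy estimate.
    normal, d = fit_plane(points[best_inliers])
    return normal, d, best_inliers
```

In a pipeline of the kind the abstract describes, the resulting plane parameters `(normal, d)` would plausibly be the quantity that enters the graph optimization alongside the visual-feature and IMU terms; how that coupling is formulated is not specified in the abstract.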